1. Introduction of stereo vision technology

Purpose of this site

The conventional approach (the pixel-disparity model) assigns to each pixel of the right image a positive parallax, that is, the number of pixels by which the corresponding point is displaced in the left image. The approach presented here instead assigns a depth number to each gaze line extending into the distance from the midpoint between the two eyes (the gaze_line-depth model). I would like to introduce a high-precision stereo vision technology that, through this new concept, makes full use of the power of graph cuts.
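To make the difference between the two models concrete, here is a minimal sketch for one row of a rectified image pair. It is only an illustration under stated assumptions, not the implementation used on this site: the function names are hypothetical, the gaze line is indexed by `s = x_left + x_right` (twice the column seen from the midpoint between the cameras), and the depth number is assumed to equal the disparity `x_left - x_right`.

```python
def left_column_from_disparity(x_right, disparity):
    """Pixel-disparity model: the label lives on a right-image pixel.

    A non-negative disparity says how many pixels further right the
    corresponding point lies in the left image.
    """
    if disparity < 0:
        raise ValueError("disparity must be non-negative")
    return x_right + disparity


def columns_from_gaze_line(s, depth_number):
    """Gaze_line-depth model (assumed parameterization, for illustration).

    s            = x_left + x_right  (hypothetical gaze-line index)
    depth_number = x_left - x_right  (assumed equal to the disparity)

    The label lives on a line through space, and both image columns
    follow from it symmetrically.
    """
    if depth_number < 0:
        raise ValueError("depth_number must be non-negative")
    if (s + depth_number) % 2 != 0:
        raise ValueError("s and depth_number must have the same parity")
    x_left = (s + depth_number) // 2
    x_right = (s - depth_number) // 2
    return x_left, x_right


if __name__ == "__main__":
    # Same 3D point, described in both models.
    print(left_column_from_disparity(4, 2))
    print(columns_from_gaze_line(10, 2))
```

In this sketch the pixel-disparity label is attached to one image and the other image is derived from it, whereas the gaze-line label treats the left and right images symmetrically.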

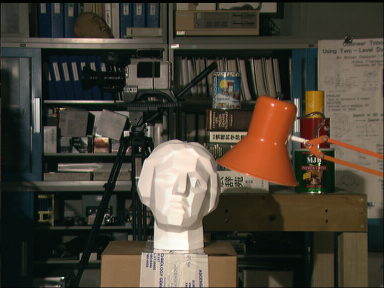

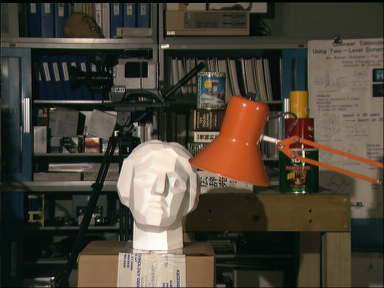

This animation shows a three-dimensional reconstruction from the left and right camera images of the TSUKUBA image pair, a well-known stereo vision benchmark.

Feature 1: Stereo vision that does not try to find corresponding points

Stereo vision, also known as stereo matching, has traditionally been regarded as a technique for finding which parts of the left and right images correspond to each other. The technology presented here abandons that idea and treats space itself as the object of processing from the outset. The next video shows the new stereo vision system operating in real time.

Feature 2: High-speed processing by hierarchical approximate GPU graph cut

I implemented graph cuts on the GPU using the Wave-Front-Fetch method and the Push-Relabel method. This graph cut is about 20 times faster than the BK (Boykov-Kolmogorov) algorithm, which is considered the fastest in the vision field. Hierarchical approximation makes processing 10 times faster. The Wave-Front-Fetch graph cut is a method I devised myself, and it performs well when used in the first half of the graph cut. Of the following two videos, the first shows that the resolution is high in the central part, and the second shows the camera being moved.
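For readers unfamiliar with push-relabel, the following is a minimal single-threaded sketch of the generic textbook algorithm on a small dense graph. It is not the GPU implementation described above, it does not include the Wave-Front-Fetch method or the hierarchical approximation, and the function name and the example graph are chosen purely for illustration.

```python
from collections import deque


def push_relabel_min_cut(capacity, source, sink):
    """Return (max_flow, source_side) for a dense capacity matrix."""
    n = len(capacity)
    residual = [row[:] for row in capacity]  # remaining capacity u -> v
    height = [0] * n
    excess = [0] * n
    height[source] = n
    active = deque()

    # Saturate every edge leaving the source.
    for v in range(n):
        c = residual[source][v]
        if c > 0 and v != source:
            residual[source][v] = 0
            residual[v][source] += c
            excess[v] += c
            if v != sink:
                active.append(v)

    while active:
        u = active.popleft()
        while excess[u] > 0:  # discharge u
            pushed = False
            for v in range(n):
                if residual[u][v] > 0 and height[u] == height[v] + 1:
                    delta = min(excess[u], residual[u][v])
                    residual[u][v] -= delta
                    residual[v][u] += delta
                    excess[u] -= delta
                    if v != source and v != sink and excess[v] == 0:
                        active.append(v)  # v becomes active
                    excess[v] += delta
                    pushed = True
                    if excess[u] == 0:
                        break
            if not pushed:
                # Relabel: lift u just above its lowest residual neighbour.
                height[u] = 1 + min(
                    height[v] for v in range(n) if residual[u][v] > 0
                )

    # The source side of the cut is what is still reachable in the residual graph.
    source_side = {source}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for v in range(n):
            if residual[u][v] > 0 and v not in source_side:
                source_side.add(v)
                queue.append(v)
    return excess[sink], source_side


if __name__ == "__main__":
    # Small example graph: node 0 is the source, node 5 is the sink.
    cap = [
        [0, 16, 13, 0, 0, 0],
        [0, 0, 0, 12, 0, 0],
        [0, 4, 0, 0, 14, 0],
        [0, 0, 9, 0, 0, 20],
        [0, 0, 0, 7, 0, 4],
        [0, 0, 0, 0, 0, 0],
    ]
    print(push_relabel_min_cut(cap, 0, 5))
```

Push-relabel is attractive for GPUs because each push and relabel step only looks at a node and its neighbours, so many nodes can in principle be processed in parallel. The BK algorithm instead grows and repairs search trees to find augmenting paths.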

Feature 3: Two inexpensive webcams are OK

Stereo rectification is performed using the very scene being processed, without a calibration board. As a result, two inexpensive webcams can serve as a stereo camera. The next video shows the calibration.

Self-introduction

My name is Kiyoshi Oguri, emeritus professor at Nagasaki University. Until March 2017 I was a professor in the Department of Computer Science and Engineering at Nagasaki University; I have now retired and live as I please. My original specialty was computer design and design methods. SFL (Structured Function description Language) is a hardware description language that I once researched and developed.

For the past seven or eight years I have been interested in stereo vision and sound source localization. Long ago I proposed the dynamically reconfigurable architecture PCA (Plastic Cell Architecture), but when the overwhelming usage turned out to be things like building a CPU with it, I came to think that an architect should know the application. Any application would have done, but the most interesting to me were stereo vision and sound source localization.

I do not find it interesting to begin with a thorough survey, so I first worked things out in my own way. When I compared the finished result with existing work, it turned out to be quite different. The stereo vision I developed seems to work a little better than the OpenCV sample, so I would like to present its ideas and source code here. The basic idea is described in the paper "Stereovision without the concept of correspondence", presented at the IPSJ CVIM workshop. In hindsight, I think "Stereovision that doesn't try to find a correspondence" would have been a more appropriate title. I have opened a "Private Stereo Vision Laboratory" at home and spend my days on research.